NVIDIA’s GTC 2023 keynote has just ended, with GTC itself wrapping up on March 23rd, which means an array of exciting new industrial announcements just went live. You can always watch the full keynote at the following link, but in this article we’ve also curated a selection of the most important announcements made during the event.

Without further ado, let’s get to the news!

Industrial and embedded

Starting with the Isaac platform – NVIDIA’s Isaac Sim is now available on Omniverse Cloud for Enterprises as a platform-as-a-service solution for computationally intensive tasks, reducing the need for costly on-site equipment.

A new Isaac ROS release is also available, focused on AI map orientation. It features new LIDAR support packages, improved depth perception and people detection, and more. Source code has been released for NVIDIA NITROS, a ROS 2 hardware acceleration package, and several other hardware acceleration packages have received open-source releases as well. Finally, Isaac ROS now supports the Jetson Orin NX and Orin Nano modules.

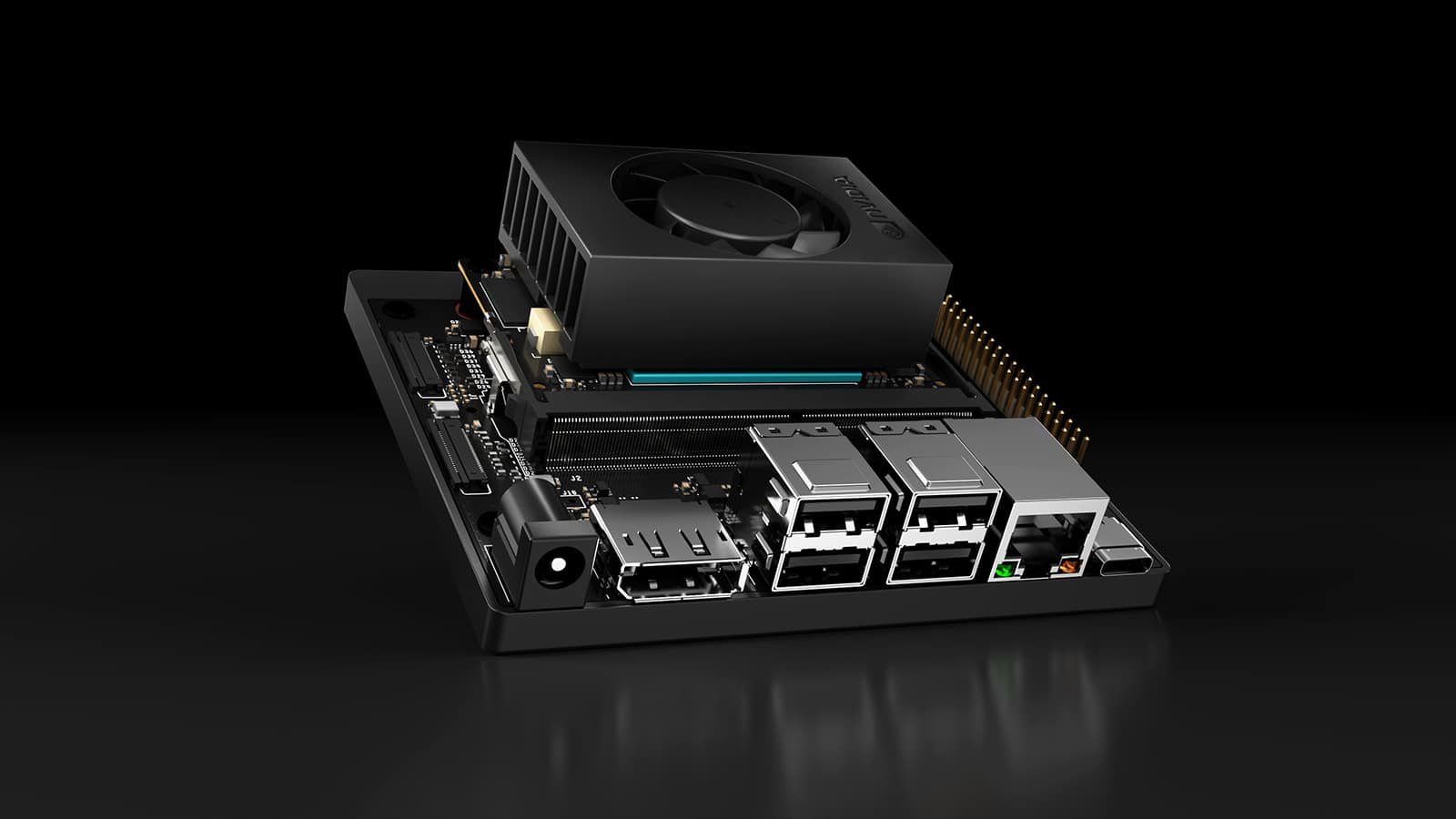

The NVIDIA Jetson Orin series recently got a new member – the Jetson Orin Nano module, offering 40 TOPS of INT8 performance – up to 80x the performance of the original Jetson Nano – at an affordable starting price of $199. The new Orin Nano Developer Kit will feature the higher-end 8GB RAM module on-board and will retail for $499. This new kit supports various AI models and features a hexa-core Arm Cortex-A78AE CPU.

NVIDIA’s Metropolis software framework is also getting an upgrade, with new features focused on accessibility and ease of use. TAO 5.0 allows complex AI models to be trained without writing any code, and thanks to its ONNX export functionality, these models can be deployed on almost any device – including CPUs, GPUs, MCUs and DLAs. TAO 5.0 is now open source, as well.

One of TAO 5.0’s main goals is to enable industry experts whose skills lie outside of the AI realm to contribute to model training and deployment, bringing their expertise into the world of artificial intelligence.

Metropolis Microservices were also announced, for building cloud-native multi-camera tracking apps. DeepStream now offers better interfaces for LiDAR, radar and environmental sensors, so that complex datasets can be acquired and processed.

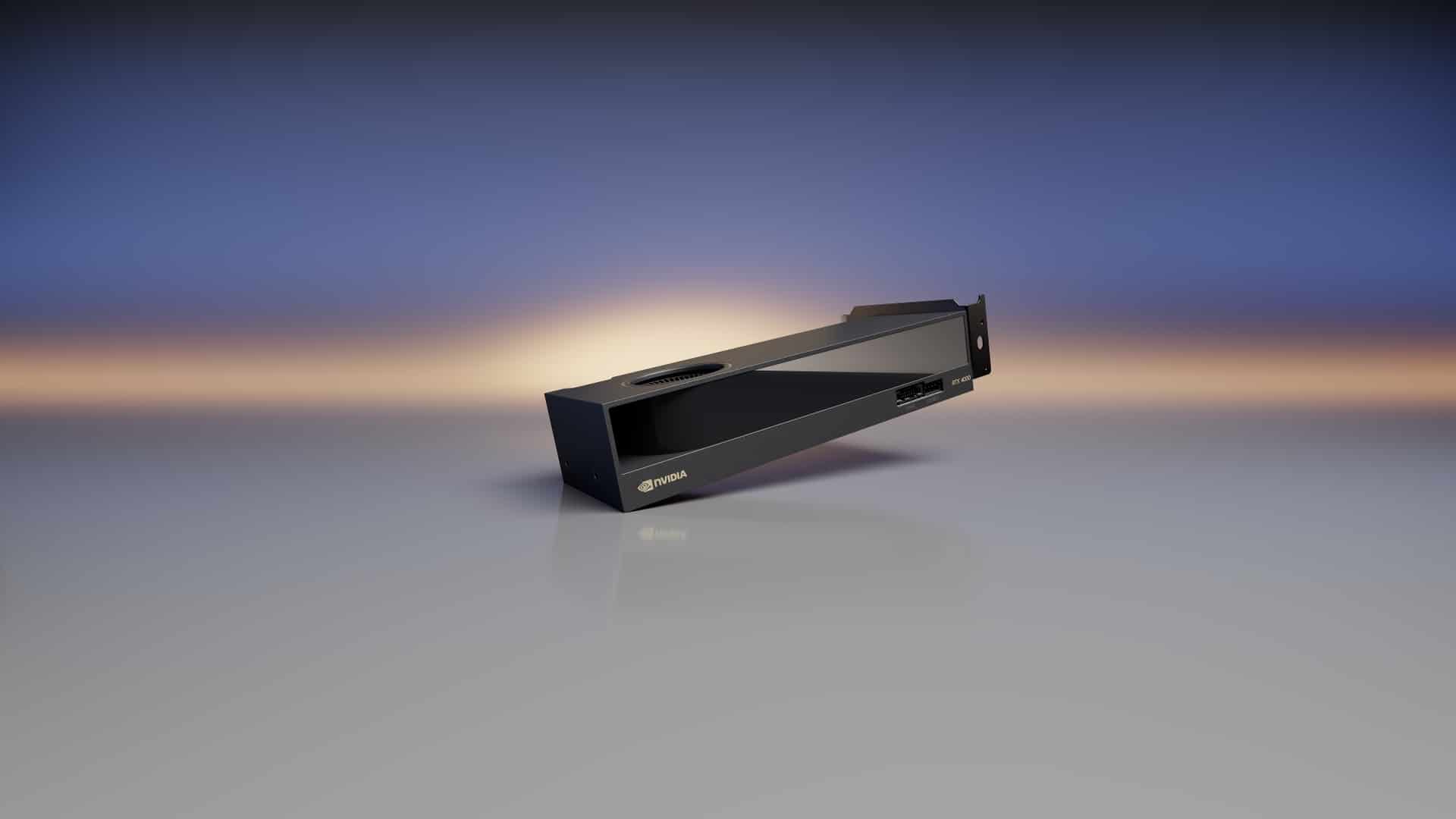

For running Omniverse, three systems were announced: a new series of workstations built by Boxx, Dell, HP and Lenovo around the RTX 4000 SFF Ada Generation GPU (the new small-form-factor workstation flagship), new OVX 3.0 servers, and Omniverse Cloud.

A new series of professional laptop GPUs based on the Ada architecture has also been unveiled. The flagship RTX 5000 Ada Laptop GPU comes with 9,728 CUDA cores, 304 Tensor cores and 16 GB of GDDR6 VRAM.

Computational lithography

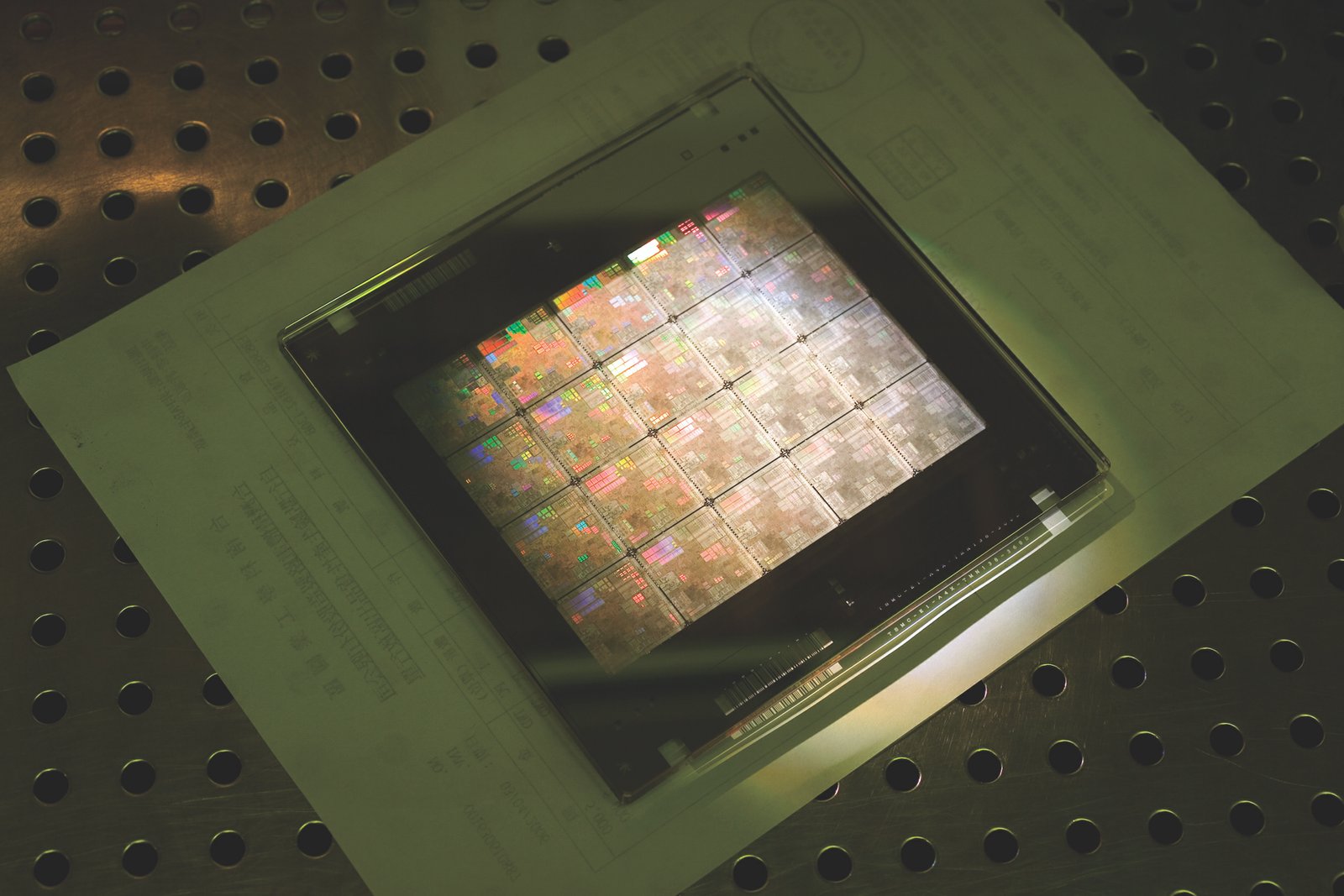

GTC 2023 also brought the announcement of the new cuLitho software library for solving the complex problem of designing inverse lithography masks. Inverse lithography is a necessity at the current silicon scale, but computing the required masks takes significant time. cuLitho uses GPU acceleration on NVIDIA hardware to speed this operation up. According to NVIDIA, “500 DGX H100 systems do the work of 40,000 CPU systems”, while taking up 1/8th of the space and 1/9th of the power of a traditional computational setup. TSMC will start qualifying cuLitho in June, and NVIDIA will work with other partners as well in the push toward 2 nm production processes.
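
To put NVIDIA's quoted figures in perspective, here is a minimal sketch of the arithmetic behind them. Only the 500, 40,000, 1/8 and 1/9 values come from the keynote; the function name and the 36 MW baseline in the usage line are hypothetical, chosen purely for illustration.

```python
from fractions import Fraction

# Figures quoted by NVIDIA in the keynote.
DGX_SYSTEMS = 500
CPU_SYSTEMS = 40_000
SPACE_FRACTION = Fraction(1, 8)   # GPU setup uses 1/8th of the space
POWER_FRACTION = Fraction(1, 9)   # GPU setup uses 1/9th of the power

# How many CPU systems a single DGX H100 stands in for, per the quote.
cpu_systems_replaced_per_dgx = CPU_SYSTEMS // DGX_SYSTEMS


def gpu_footprint(cpu_baseline, fraction):
    """Scale a CPU-cluster baseline (any unit) by one of the quoted fractions."""
    return cpu_baseline * fraction


# Hypothetical baseline: a CPU cluster drawing 36 MW (assumed, not from NVIDIA).
example_gpu_power_mw = gpu_footprint(36, POWER_FRACTION)

print(cpu_systems_replaced_per_dgx)
print(example_gpu_power_mw)
```

In other words, the quote implies each DGX H100 system replaces 80 CPU systems for this workload, and any power or floor-space baseline you plug in shrinks by the stated factor.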

Server hardware

The DGX H100 AI supercomputer is now in production, seemingly due to Intel finally shipping Sapphire Rapids CPUs.

NVIDIA’s 72-core Arm-based Grace CPU is finally in validation, and will be used in the Grace Superchip, a 144-core module connecting two Grace CPUs that is aimed squarely at server use. Grace is so energy efficient that air cooling is enough – two Grace Superchips (a whopping 288 cores total) can fit in an air-cooled 1U server, making them some of the most energy-efficient CPUs on the market.

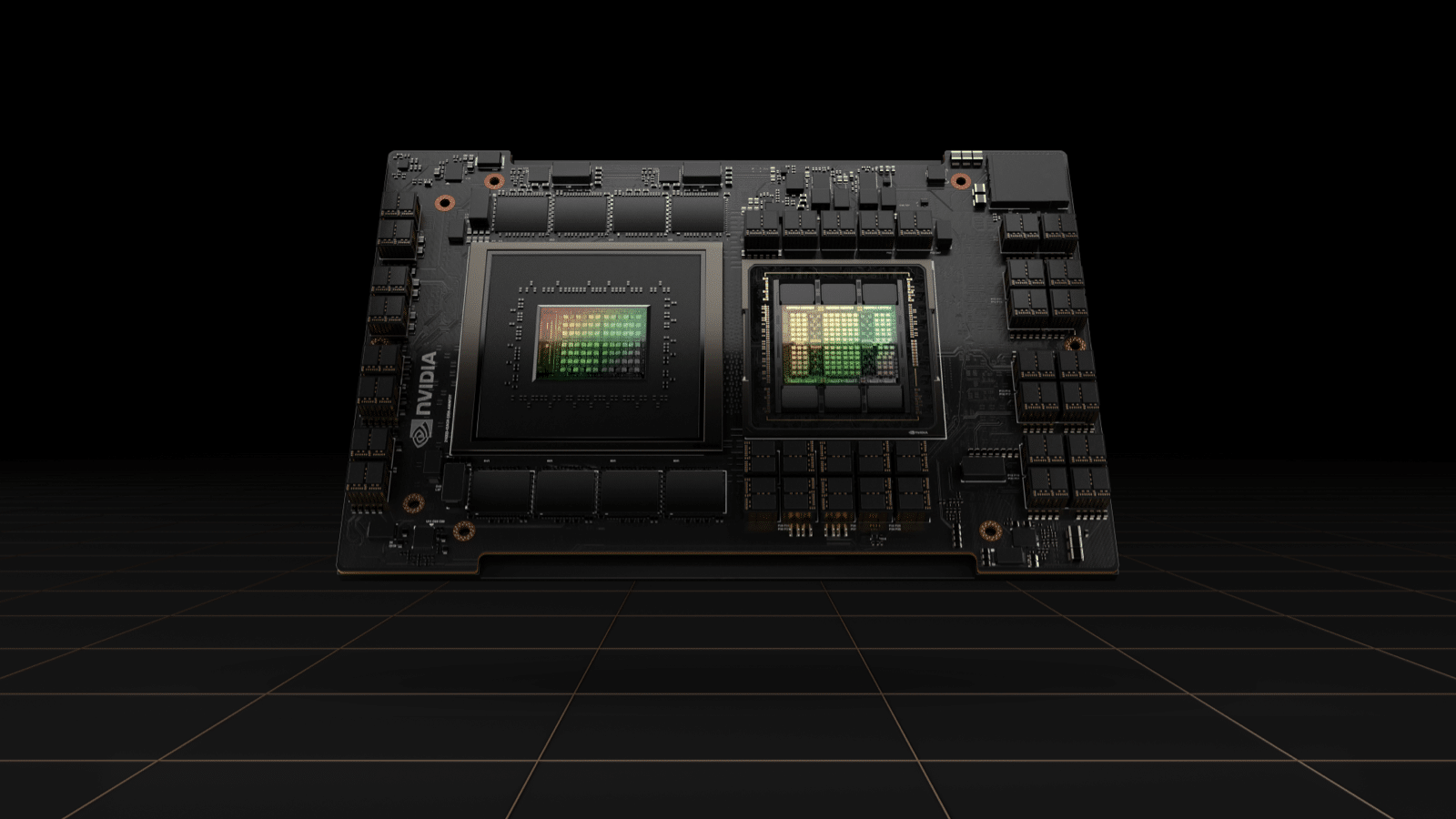

New AI accelerators are also available: the new L4, designed for video editing, automatic content moderation and encoding and decoding, and the H100 NVL, a dual-card accelerator based on two H100 cards linked with NVLink bridges, bringing the best performance yet to language model inference. These new modules sit alongside the previously announced L40 image generation card and the Grace Hopper Superchip, which combines the Grace CPU with a Hopper GPU, providing much tighter integration and faster data transfer between the two dies.

An interesting aside about the H100 NVL is that neither of the H100 cards in it seems to be binned. Other GH100-based cards, like the original H100, technically have the full 96 GB of HBM on board (6 stacks of 16 GB each), but the sixth stack is always disabled on these due to a low yield of “perfect” chips, leaving these boards with only 80 GB of RAM. The total of 192 GB means that NVIDIA has finally found a way to produce non-binned H100 cards with high enough yield – either that, or the people training language models are willing to pay a hefty premium for a set of perfect GH100 dies.

For further datacenter optimisation, NVIDIA’s new BlueField-3 DPUs are also in production, offering a major step up over the previous generation of DPUs.
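
The memory arithmetic behind that observation can be sketched in a few lines. The stack size and stack counts are the ones given above; the function and constant names are our own, and the 192 GB total is the figure this article works from rather than something the code verifies.

```python
STACK_GB = 16           # capacity of one HBM stack, as stated above
STACKS_ON_PACKAGE = 6   # stacks physically present on a GH100 package


def hbm_capacity_gb(enabled_stacks, stack_gb=STACK_GB):
    """Usable HBM in GB for a card with the given number of enabled stacks."""
    if not 0 <= enabled_stacks <= STACKS_ON_PACKAGE:
        raise ValueError("enabled_stacks must be between 0 and 6")
    return enabled_stacks * stack_gb


full_card_gb = hbm_capacity_gb(6)      # all six stacks enabled
binned_card_gb = hbm_capacity_gb(5)    # sixth stack disabled
dual_full_gb = 2 * full_card_gb        # two fully enabled cards
dual_binned_gb = 2 * binned_card_gb    # two binned cards

print(full_card_gb, binned_card_gb, dual_full_gb, dual_binned_gb)
```

Two binned cards would only add up to 160 GB, so a 192 GB total is only reachable if both cards have all six stacks enabled.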

DGX Cloud and AI Foundations

In a push toward cloud-based infrastructure, many of NVIDIA’s services are now available in a HaaS model, including the DGX supercomputer, which can now be used through the cloud. Three new AI “Foundations” are also available – NeMo, Picasso and BioNeMo, for text generation, image generation and biological research, respectively. These three can be hosted locally on accelerated hardware, but can also be accessed through DGX Cloud at a low entry price for businesses.

These AI foundations can be used as-is or optimised and tuned to a specific business’s needs. They will also be used in cooperation with Shutterstock, Getty and Adobe, amongst others, to provide advanced tools to creatives.

Reclaiming the performance

Reclaiming performance was a common theme in the GTC 2023 keynote, and a catchphrase used multiple times. Growing demand for big data and ever greater compute power requirements are at odds with the green agenda, forcing innovative acceleration solutions instead of simple scaling. Almost all of the new hardware announced today – including the Grace CPU, the L4 and H100 NVL accelerators and BlueField-3 – brings significant power savings to data centres. cuLitho applies similar acceleration technology to significantly speed up several complex processes, as well. It seems going green was an underlying theme and one of the motivations for NVIDIA to push accelerated computing further.

And that’s mostly a wrap – we might have missed a point or two, but we tried to list everything announced related to our field. GTC 2023 was filled to the brim with new information – and we definitely recommend watching the GTC 2023 keynote itself!
